In February 2024, a finance worker at a multinational firm was deceived into transferring $25 million to fraudsters who used deepfake technology to pose as the company’s chief financial officer during a video conference call. In this elaborate scam, the worker joined a video call with what he believed were several other members of staff, all of whom were deepfake recreations.

As AI technology advances, deepfakes are becoming increasingly sophisticated, making it harder to distinguish between what is real and what is fake. Conventional security methods often fall short in addressing these new threats, prompting organizations to adopt a comprehensive approach that combines advanced technology with a deepfake-aware mindset. In this blog post, we’ll explore what deepfakes are, discuss why they pose a growing threat, and examine how SESTEK combats deepfakes by leveraging its expertise and continuous R&D efforts.

What Is a Deepfake?

Deepfake technology uses artificial intelligence (AI) to create highly realistic fake audio, video, or images by learning patterns from real content and generating manipulated versions that are hard to distinguish from the original.

Deepfakes can be used to spread misinformation, impersonate people, or even trick biometric security systems by mimicking voices or faces. For instance, attackers may create fake voices to impersonate customers or executives, leading to unauthorized access or fraudulent transactions. In biometric authentication, deepfake audio or video can be used to bypass security systems by replicating a person’s unique voice or facial features. Additionally, AI-generated fake images or videos can spread misinformation, potentially harming an organization's reputation and causing financial losses.

Why Are Deepfakes Becoming a Bigger Threat?

Deepfakes are becoming more sophisticated, accessible, and widespread, making them an even greater security risk.

One key reason is that deepfake technology is constantly improving. Generative AI-powered deepfakes use self-learning systems that continuously refine their ability to bypass detection, making it harder to distinguish fake content from real. As detection methods improve, so do the techniques used to fool them.

Deepfakes are also easier to create than ever before. Just a few years ago, generating a convincing fake voice required at least 30 minutes of recorded audio. Today, AI models can replicate a person’s voice with as little as three seconds of audio. This rapid advancement lowers the barrier for attackers, making deepfake scams more accessible to a wider range of threat actors.

Beyond individual attacks, deepfake fraud can now scale. With Generative AI, bad actors can target multiple victims at the same time using minimal resources. This automation makes deepfake scams more efficient, increasing the potential damage.

Social media further amplifies the problem. Once a deepfake spreads online, it becomes harder to control or debunk. The more people see it, the more likely they are to believe it, even if it’s false. Companies with lesser-known executives are particularly vulnerable, as distinguishing between real and fake content becomes even more challenging.

Deepfake Threats Demand Smarter and Stronger Defenses

Deepfakes don’t follow traditional fraud patterns. They mimic voices and faces with near-perfect accuracy, making them difficult to detect using standard security measures. Most fraud detection systems rely on rule-based or behavioral analysis, but these approaches struggle against AI-generated fakes.

Deepfakes spread rapidly, making them difficult to contain. Once misinformation goes viral, it becomes harder to control, increasing the need for stronger source identification and detection solutions. Gartner advises organizations to work with vendors that offer advanced capabilities for detecting and addressing emerging threats, including deepfakes.

Forrester warns that deepfake prevention shouldn’t be seen as a one-time fix. Instead, businesses must recognize it as an ongoing risk and allocate resources to stay prepared. This includes combining AI-driven verification with additional security layers to prevent fraud and account takeovers.

Awareness is also key. Employee training with real-world examples and clear response strategies helps businesses identify and mitigate deepfake threats more effectively.

Continuous investment is essential to staying ahead of deepfake threats. Organizations should prioritize security solutions from technology providers that not only keep pace with advancements in deepfake technology but also continuously enhance their defenses through R&D. Proactively investing in evolving security measures will be crucial in mitigating future risks.

SESTEK’s Advantage: Strengthening Security with R&D

Advancements in AI, voice conversion, and text-to-speech (TTS) technology have made it easier than ever to generate highly realistic synthetic voices. Attackers can use these methods to record and replay a user’s voice, bypassing authentication systems. This poses a serious security risk, especially for industries like banking and financial services that rely on voice-based authentication. Developing reliable solutions that can distinguish between real and synthetic voices is essential to mitigating these threats.

At SESTEK, our deep expertise in voice technologies enables us to develop highly effective voice biometrics solutions to combat these challenges. Our R&D team is continuously testing the latest technologies and refining our own solutions, allowing us to stay ahead of emerging threats and outperform competitors.

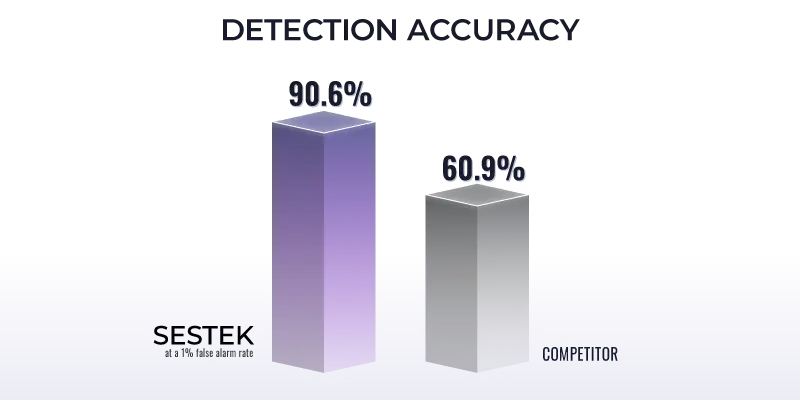

Our commitment to R&D has made SESTEK a leader in deepfake detection. In a recent test, our R&D team compared our model’s accuracy against that of a competitor specializing in this field, using a dataset of approximately 2,000 samples. This dataset included widely used deepfake sources such as "In the Wild" datasets, Azure TTS, and our proprietary SESTEK Voice Conversion method. At a 1% false alarm rate, our model achieved 90.6% detection accuracy, significantly outperforming our competitor’s 69.2%.
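For readers unfamiliar with the metric, here is a minimal sketch of how detection accuracy at a fixed false alarm rate is commonly computed. It is not SESTEK's evaluation code. It assumes each sample receives a score where a higher value means "more likely synthetic", and the function names and toy scores are hypothetical, chosen only for illustration.

```python
def threshold_at_false_alarm_rate(genuine_scores, max_far):
    """Pick a threshold so that at most `max_far` of genuine samples score above it.

    Assumption: higher score = more likely synthetic.
    """
    ranked = sorted(genuine_scores, reverse=True)
    # Number of genuine samples we are allowed to flag by mistake.
    allowed = int(max_far * len(ranked))
    if allowed >= len(ranked):
        return float("-inf")
    # Samples strictly above this value number at most `allowed`.
    return ranked[allowed]


def detection_rate(spoof_scores, threshold):
    """Share of synthetic samples whose score exceeds the threshold."""
    return sum(s > threshold for s in spoof_scores) / len(spoof_scores)


# Toy scores (hypothetical, not real evaluation data).
genuine = [0.05, 0.10, 0.20, 0.90]
spoof = [0.15, 0.60, 0.95, 0.99]

# A 25% false alarm rate is used only because the toy set has four samples;
# a test like the one described above would use max_far=0.01.
threshold = threshold_at_false_alarm_rate(genuine, max_far=0.25)
rate = detection_rate(spoof, threshold)
```

Fixing the false alarm rate first puts two models on an equal footing: both are allowed to inconvenience the same share of genuine users, so the remaining difference is how many synthetic samples each one catches.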

Our technology consistently delivers results that surpass many models and systems in the industry, even outperforming benchmarks reported in recent research papers. But we don’t stop there. As deepfake technology evolves, so do fraud tactics. That’s why we continuously refine our solutions through rigorous testing and ongoing development, ensuring we remain at the forefront of deepfake security.

Strengthen Your Security with Deepfake-Proof Solutions

Contact us for a demo and discover how our advanced biometrics solutions can safeguard your business against emerging security threats.

Authors: Yusuf Sali, R&D Engineer & Debi Çakar, Product Owner at SESTEK